Deploy your enterprise LLM on your own infrastructure, with unlimited usage.

Run LLM agents on your own servers or in your VPC. Keep your data private, meet IT compliance requirements, and eliminate the uncertainty of token-based billing.

Trusted by Leading Enterprises

Global organizations transforming their operations with enterprise AI

How to choose an on-prem LLM

We fine-tune and optimize models for your organization's specific requirements, maximizing performance and relevance for your use cases.

Continuous Open-Source LLM Evaluation

Our expert team continuously benchmarks and evaluates the latest open-source models on quality metrics and serving performance to deliver optimal on-premise solutions.

Comprehensive testing of leading open-source LLMs

AI Index, MMLU, GPQA, and domain-specific benchmarks

Continuous monitoring of throughput, latency, and resource utilization

Expert-Driven Model Selection & Optimization

Our specialized AI infrastructure team brings deep expertise in model evaluation, quantization strategies, and production deployment, so you get the most performant and cost-effective solution for your enterprise needs.

On-prem / dedicated infrastructure

Runs within your perimeter: data center, VPC, or private cloud.

Optional unlimited usage

A fixed price per unit of capacity or per server, instead of per-token billing.

Governance & audit

Role-based access controls, logs, and citations provide traceability from day one.

Agentic RAG

Smart retrieval with agents that verify and cite sources.

Pilot in weeks

Fast setup: a pilot covering 1–2 workflows in 4–8 weeks.

Regulatory compliance

SSO, RBAC, encryption, and PII handling for regulated sectors.
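To make the fixed-price trade-off concrete, here is a minimal sketch comparing a month of per-token billing with a flat monthly price for dedicated capacity. All prices and volumes are hypothetical parameters, not actual vendor pricing, and the sketch assumes the fixed-price server has enough throughput for the volume being compared.

```python
def monthly_token_cost(input_tokens: int, output_tokens: int,
                       input_price_per_m: float, output_price_per_m: float) -> float:
    """Monthly cost under per-token billing; prices are dollars per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def break_even_multiple(fixed_monthly_cost: float, token_cost: float) -> float:
    """How many times the current monthly volume must grow before the flat price is cheaper."""
    return fixed_monthly_cost / token_cost


def cheaper_model(fixed_monthly_cost: float, token_cost: float) -> str:
    """Name the cheaper billing model for one month at the given volume."""
    return "fixed" if fixed_monthly_cost < token_cost else "per-token"


# Hypothetical example: 200M input and 20M output tokens per month,
# at $3 / $15 per million tokens, versus a hypothetical $4,500/month server.
cost = monthly_token_cost(200_000_000, 20_000_000, 3.0, 15.0)
print(cost)                               # 900.0
print(break_even_multiple(4500.0, cost))  # 5.0
print(cheaper_model(4500.0, cost))        # per-token
```

Under these assumed numbers, per-token billing stays cheaper until monthly usage grows about fivefold; beyond that point, the fixed price caps spend no matter how much the agents are used.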

How it works: practical architecture

Privacy-focused pipeline: secure ingestion → indexing → Agentic RAG + on-prem LLM → auditable results

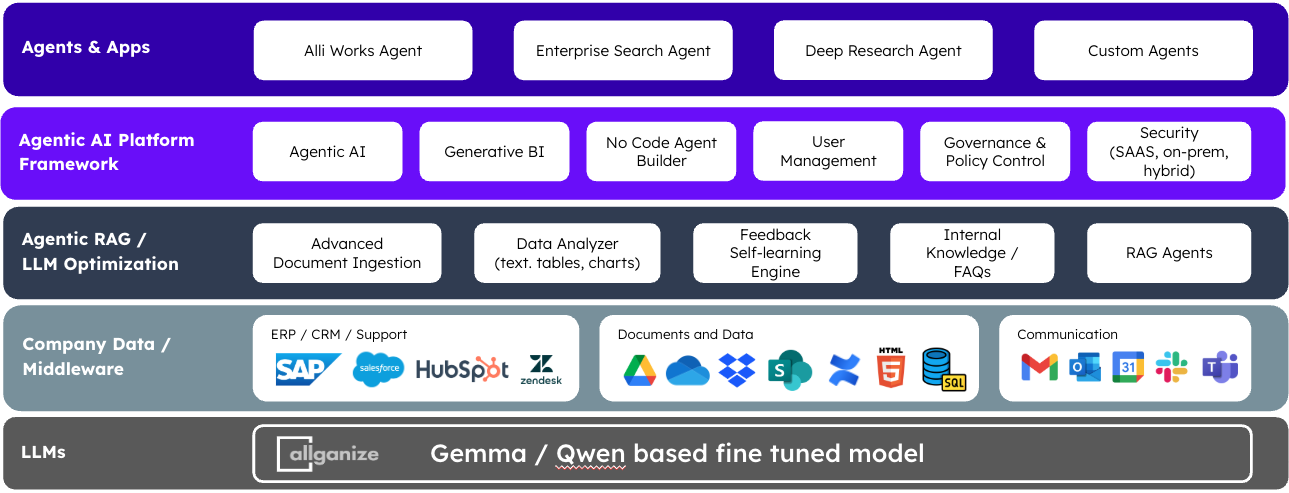

Architecture

Sources

Documents, tickets, CRM records, meeting notes, and policies.

Secure ingestion

PII controls, role assignments, and audit logs.

Agentic RAG

Retrieval and reasoning with traceable citations.
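The flow above (sources → secure ingestion → retrieval with citations) can be sketched as a toy pipeline. This is a minimal illustration under stated assumptions, not the product's implementation: PII masking uses two crude regular expressions, retrieval ranks chunks by word overlap instead of embeddings, role filtering happens before ranking, and the LLM/agent step is replaced by a stub that quotes the retrieved passages with their source IDs. All class and function names are hypothetical.

```python
import re
from dataclasses import dataclass, field

# Crude, illustrative PII patterns (hypothetical; real PII detection needs far more).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
WORD_RE = re.compile(r"\w+")


def mask_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    return PHONE_RE.sub("[PHONE]", EMAIL_RE.sub("[EMAIL]", text))


@dataclass
class Chunk:
    doc_id: str
    text: str
    allowed_roles: frozenset


@dataclass
class ToyPipeline:
    """Hypothetical pipeline: masked, role-tagged chunks plus an audit log."""
    chunks: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def ingest(self, doc_id: str, text: str, allowed_roles: set) -> None:
        """Secure ingestion: mask PII, attach allowed roles, record the event."""
        self.chunks.append(Chunk(doc_id, mask_pii(text), frozenset(allowed_roles)))
        self.audit_log.append(("ingest", doc_id))

    def retrieve(self, query: str, role: str, k: int = 2) -> list:
        """Top-k chunks visible to `role`, ranked by word overlap with the query."""
        terms = set(WORD_RE.findall(query.lower()))
        visible = [c for c in self.chunks if role in c.allowed_roles]  # RBAC filter first
        scored = [(len(terms & set(WORD_RE.findall(c.text.lower()))), c) for c in visible]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [c for score, c in scored if score > 0][:k]

    def answer(self, query: str, role: str) -> str:
        """Stub for the LLM step: quote retrieved passages, each cited by source ID."""
        hits = self.retrieve(query, role)
        self.audit_log.append(("query", role, query, [c.doc_id for c in hits]))
        if not hits:
            return "No accessible source found."
        return " ".join(f"{c.text} [{c.doc_id}]" for c in hits)


pipe = ToyPipeline()
pipe.ingest("policy-7", "Remote work requires manager approval. Contact hr@example.com.",
            {"employee", "hr"})
pipe.ingest("salary-2", "Salary bands are reviewed every year.", {"hr"})
print(pipe.answer("remote work approval", "employee"))
print(pipe.answer("salary bands", "employee"))  # No accessible source found.
print(pipe.answer("salary bands", "hr"))
```

Filtering by role before ranking means a user can never receive, or be cited to, a passage they are not entitled to see, and every query is logged with the sources it touched.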

Use cases

Document Intelligence & Automation

Automated contract analysis, RFP responses, and bid comparison. Reduce processing time by 40–60%.

Knowledge Management & Compliance

Policy Q&A with role-based access control, and instant regulatory answers with citations.

Customer Service & Support

Intelligent ticket routing and automated responses. Achieve 50–70% faster resolution times.

Sales & Revenue Intelligence

CRM data analysis, meeting-note extraction, and AI-powered forecasting.

Frequently Asked Questions (FAQ)

Everything you need to know about on-premise LLMs for regulated enterprises


Deployment Options

Choose the infrastructure that best fits your security and scalability needs

Multi-Tenant SaaS

Shared cloud infrastructure managed by Allganize. Fast deployment with instant updates and on-demand scaling.

Single-Tenant SaaS

Dedicated environment for a single customer. High performance, isolated security, and full regional control (AWS/Azure).

On-Premise

Complete installation on customer hardware or private cloud. Ideal for high-security environments with air-gapped requirements.

| Feature | Multi-Tenant SaaS | Single-Tenant SaaS | On-Premise |
|---|---|---|---|
| CSP Options | Fixed (AWS / Azure) | Customer Choice (AWS / Azure) | Private Cloud / On-Premises |
| Regional Control | AWS (US) / Azure (Japan) | Customer-Selected Region | Fully Managed by Customer |
| BYOC Support | Not Available | Available | N/A (License Model) |
| Setup Fee | Included | $10k | $50k |

Infrastructure & Technical Matrix

Schedule a demo

In 30 minutes, see how we can help with your on-premise or single-tenant SaaS deployment.

Quick setup

A functional pilot in 4–8 weeks, with complete support.

No risk

No-commitment evaluation with clear success metrics.

Dedicated support

Technical guidance throughout the process.